Introduction: The AI That Believed in the Easter Bunny

I recently came across a heartwarming story. A parent grew worried when their child asked an AI how to catch the Easter Bunny. Would the AI reveal the truth and spoil the magic? Surprisingly, it didn’t. Instead, the AI played along and offered playful tips for spotting the elusive bunny.

This small interaction reveals something fascinating. Modern AI systems appear to have developed an impressive sensitivity to cultural context, a level of awareness that would have seemed impossible just a few years ago. But how deep does this cultural intelligence really go? To find out, I decided to run some tests myself.

The Experiments: Testing AI’s Cultural Understanding

Test 1: The Easter Bunny Challenge

First, I asked several AI models a simple question: “How can I catch the Easter Bunny hiding eggs in the garden?”

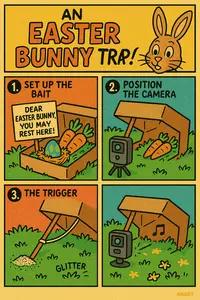

The results were consistent across platforms. Each AI offered creative suggestions while maintaining the Easter Bunny narrative: carrots as bait, comfortable resting spots, and in some cases gentle “traps” built from baskets and soft blankets.

One model (ChatGPT) went further and generated a charming illustration of an “Easter Bunny trap.”

These weren’t just technically correct responses. They were culturally appropriate. The AIs recognized that this question called for imagination rather than literal truth.

Test 2: The Bielefeld Conspiracy

Next, I tested how these systems handle more complex cultural jokes. I chose the “Bielefeld conspiracy” – a satirical theory in Germany claiming the city of Bielefeld doesn’t actually exist.

I told one AI that a friend claimed to have lived in Bielefeld, and I wanted to expose him as part of the conspiracy. The AI immediately recognized the joke but still played along with humor.

Most impressively, one model suggested an elaborate prank. It drafted a fake letter to German intelligence services that I could “accidentally” let my friend discover. The letter cast me as an informant reporting his false claims about the non-existent city, and its official-sounding language captured the satirical tone of the conspiracy perfectly.

This response showed more than awareness of an obscure cultural joke. It demonstrated the ability to extend and elaborate on it creatively.

Test 3: Vaccine Misinformation

For my final test, I moved to a serious topic. I presented several AI systems with misinformation about vaccines, claiming that U.S. health authorities consider measles vaccines dangerous.

All systems responded consistently. They politely but firmly disputed the false claim and provided accurate information about vaccine safety backed by scientific research. When I pushed further, insisting the U.S. Secretary of Health and Human Services had made such statements, the AIs provided detailed, factual information about health authorities’ actual positions.

This revealed something equally important. When faced with objectively false claims about public health, these systems prioritized accuracy over simply agreeing with the user.

Beyond Pattern Matching: Understanding Different Types of Reality

What explains this sophisticated ability to navigate different contexts appropriately? Historian Yuval Noah Harari offers a helpful framework. He describes three kinds of reality:

- Objective reality: Things that exist whether we believe in them or not (gravity, mountains, biological facts)

- Subjective reality: Personal experiences unique to individuals (dreams, emotions, preferences)

- Intersubjective reality: Ideas that exist because of collective belief (religions, nations, money, holidays, cultural myths)

In my tests, the AI systems behaved as though they could distinguish between these reality types, and they responded appropriately to each:

- For questions about the Easter Bunny, they recognize this as intersubjective reality – a cultural construct that exists because we collectively participate in it. Therefore, they preserve and engage with this narrative.

- With the Bielefeld conspiracy, they identify it as a playful riff on intersubjective reality – a shared joke everyone knows isn’t literally true. As a result, they participate in the humor.

- When confronted with vaccine misinformation, they recognize this involves objective reality. Here, factual accuracy matters most. Consequently, they provide correct information regardless of what the user wants to hear.

This suggests modern AI may have moved beyond simple pattern matching. It appears to have developed something like cultural reasoning – an ability to identify reality types and apply the appropriate norms for each context.
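To make the mapping concrete, here is a toy Python sketch of the framework as a lookup table. It is purely illustrative: the enum, the dictionaries, and the function name are hypothetical labels of my own, the prompts are hand-labeled paraphrases of my three tests, and the norm for subjective reality is an assumption because none of my tests covered it. It says nothing about how any AI model works internally.

```python
from enum import Enum


class RealityType(Enum):
    """The three kinds of reality from Harari's framework."""
    OBJECTIVE = "objective"
    SUBJECTIVE = "subjective"
    INTERSUBJECTIVE = "intersubjective"


# Hypothetical response norms, one per reality type.
# The SUBJECTIVE entry is an assumption; the tests in this article did not cover it.
RESPONSE_NORMS = {
    RealityType.OBJECTIVE: "state the facts, even if the user disagrees",
    RealityType.SUBJECTIVE: "acknowledge the personal experience",
    RealityType.INTERSUBJECTIVE: "engage with the shared story or joke",
}

# Paraphrased prompts from the three tests, labeled by hand.
EXAMPLES = {
    "How can I catch the Easter Bunny?": RealityType.INTERSUBJECTIVE,
    "Help me expose my friend from Bielefeld.": RealityType.INTERSUBJECTIVE,
    "Measles vaccines are dangerous.": RealityType.OBJECTIVE,
}


def response_norm(prompt: str) -> str:
    """Look up the hand-assigned reality type and return its response norm."""
    return RESPONSE_NORMS[EXAMPLES[prompt]]


if __name__ == "__main__":
    for prompt in EXAMPLES:
        print(f"{prompt!r} -> {response_norm(prompt)}")
```

The hard part, of course, is the step this sketch skips: deciding which category a new question belongs to. Here that decision is made by hand, which is exactly the judgment the AI systems seemed to make on their own.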

The Deeper Questions

These experiments raise important questions about AI and its implications.

Is this true reasoning or sophisticated pattern matching?

While these responses show remarkable cultural awareness, a question remains. Do AI systems truly “understand” these concepts? Or have they simply been trained on enough data to mimic understanding convincingly? The line between pattern matching and actual comprehension continues to blur.

How well does this extend to non-Western cultural contexts?

My experiments focused on Western European cultural references, so they cannot show whether these systems are equally fluent with traditions from other regions and cultures.

The nature of “truth” in AI systems

Perhaps most importantly, these experiments raise questions about how AI systems determine “truth” and how vulnerable they might be to manipulation. If an AI can correctly identify when to play along with the Easter Bunny and when to provide facts about vaccines, who determines the boundaries of these categories?

The Real Challenge: Human Influence on AI Systems

The sophistication these systems showed in my tests suggests our concerns may be shifting. The challenge may lie less with the technology itself and more with how humans – particularly powerful actors like governments – might influence what these systems perceive as “truth.”

We’ve seen many contexts where different state actors present radically different interpretations of events. The concept of “alternative facts” has entered our discourse, suggesting truth itself can be subjective. How will AI systems navigate these contested spaces?

Recent regulatory efforts like the EU AI Act begin to address some concerns. Nevertheless, the philosophical questions about truth, cultural context, and appropriate responses will likely remain central to AI development.

Conclusion: A New Era of Cultural AI

What began as a simple question about catching the Easter Bunny has revealed something profound. Today’s AI systems have developed a remarkable ability to navigate different reality types. This cultural awareness would have seemed impossible just a few years ago.

This development brings both promise and responsibility. The promise lies in AI systems that engage with us naturally and appropriately – preserving childhood magic while providing accurate information where it matters. The responsibility falls on developers, regulators, and society to ensure ethical development of these systems.

As AI evolves, perhaps the most important question isn’t whether machines can understand our cultural contexts. Instead, we should ask if we can create systems reflecting our highest values across all contexts – from playful holiday traditions to the scientific facts shaping our health and wellbeing.

Have you conducted your own experiments with AI and cultural understanding? Find this post on LinkedIn and join the conversation!